In the National Defense Authorization Act, Congress directed the National Institute of Standards and Technology (NIST) to work with public and private organizations to create a voluntary risk management framework for trustworthy artificial intelligence systems. Following up on that Congressional directive, NIST has released the Artificial Intelligence Risk Management Framework 1.0 (AI RMF 1.0) to “offer a resource to the organizations designing, developing, deploying, or using AI systems to help manage the many risks of AI and promote trustworthy and responsible development and use of AI systems.” The framework could hardly be timelier: the discourse surrounding AI recently reached an inflection point following the introduction of ChatGPT, an advanced conversational AI chatbot. While ChatGPT has made AI and its potential more tangible to the masses, AI is already pervasive and heavily used in virtually every industry.

In recognition of both the seemingly limitless potential of AI and the risks that come with it, governmental authorities across the world have, over the last several years, responded to calls for regulation of AI by introducing (and in some cases passing) legislation (e.g., the EU’s proposed AI Act) and releasing voluntary AI governance standards (such as the White House’s Blueprint for an AI Bill of Rights). In addition, dozens of industry bodies and standards-setting organizations have introduced AI standards, guidelines, and frameworks, some of which are industry- and technology-agnostic and others of which are industry- or technology-specific.

The AI RMF 1.0 was developed by NIST in collaboration with stakeholders from the U.S. government, industry, and academia over a period of more than two years, following a concept paper (December 2021) and two drafts of the RMF (March and August 2022).

The AI RMF 1.0 is a voluntary framework that organizations can use to address risks in the design, development, use, and evaluation of AI products, services, and systems. It can serve as a useful guide for organizations developing and maintaining an AI governance program, because it introduces, and provides some high-level answers to, several threshold questions:

- How is an AI System defined? The AI RMF 1.0 defines “AI System” as “an engineered or machine-based system that can, for a given set of objectives, generate outputs such as predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy.”

- What risks does AI present? The AI RMF 1.0 provides a framework for addressing, documenting, and managing AI risks and potential negative impacts. It introduces three categories of potential harm related to AI systems for organizations to consider when framing relevant risks: (1) harm to people; (2) harm to an organization; and (3) harm to an ecosystem.

- Who should be involved in AI governance, and where might privacy and legal departments be involved? The AI RMF 1.0 discusses and provides examples of the AI “actors” (i.e., stakeholders) that will need to be involved in AI governance. As with privacy and cybersecurity compliance and governance, the NIST framework acknowledges that “AI Actors will represent a diversity of experience, expertise, and backgrounds and comprise demographically and disciplinarily diverse teams.”

- What are the characteristics of a “trustworthy” AI system? Trustworthy AI is also referred to as “responsible” or “ethical” AI. The RMF discusses in detail the following characteristics: valid and reliable; safe; secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair with harmful bias managed.

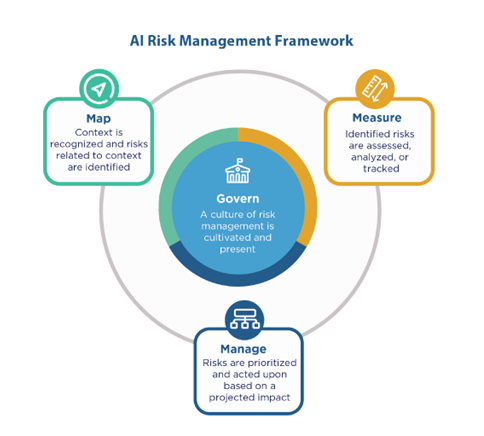

Part 2 of the framework describes the “Core” of the AI RMF 1.0, which is composed of four functions: Govern, Map, Measure, and Manage (depicted in the image below). Alongside the AI RMF 1.0, NIST released a companion AI RMF Playbook, an AI RMF Explainer Video, an AI RMF Roadmap, an AI RMF Crosswalk, and various Perspectives. The Crosswalk is a particularly informative document that compares the AI RMF 1.0 with other frameworks, such as the White House’s Blueprint for an AI Bill of Rights and the OECD Recommendation on AI.

We have significant experience advising companies in a variety of industries on AI and AI-related issues, including the creation of AI governance programs and boards, the in-house development of AI technologies, the acquisition and licensing of AI technologies from vendors, and AI and algorithmic impact assessments. Notably, we have also created a crosswalk between the AI RMF and the proposed EU AI Act. We also have deep experience advising global clients across industry verticals on the privacy-related impacts of AI, including automated decision-making (ADM), profiling, and other privacy compliance issues (see our detailed blog post on ADM and profiling here).

If you are interested in learning more about our experience with AI governance and compliance, contact one of the authors or your Squire Patton Boggs relationship attorney. If you are planning on attending the IAPP’s Global Privacy Summit in April, consider attending Kyle Fath’s session on Building an AI Framework and Governance Program.

This will not be the last time we hear from NIST this year. Indeed, NIST is set to host several public workshops in February to discuss changes to NIST’s Cybersecurity Framework, and we will be here to guide you through those changes, too.
